Latest in: Press

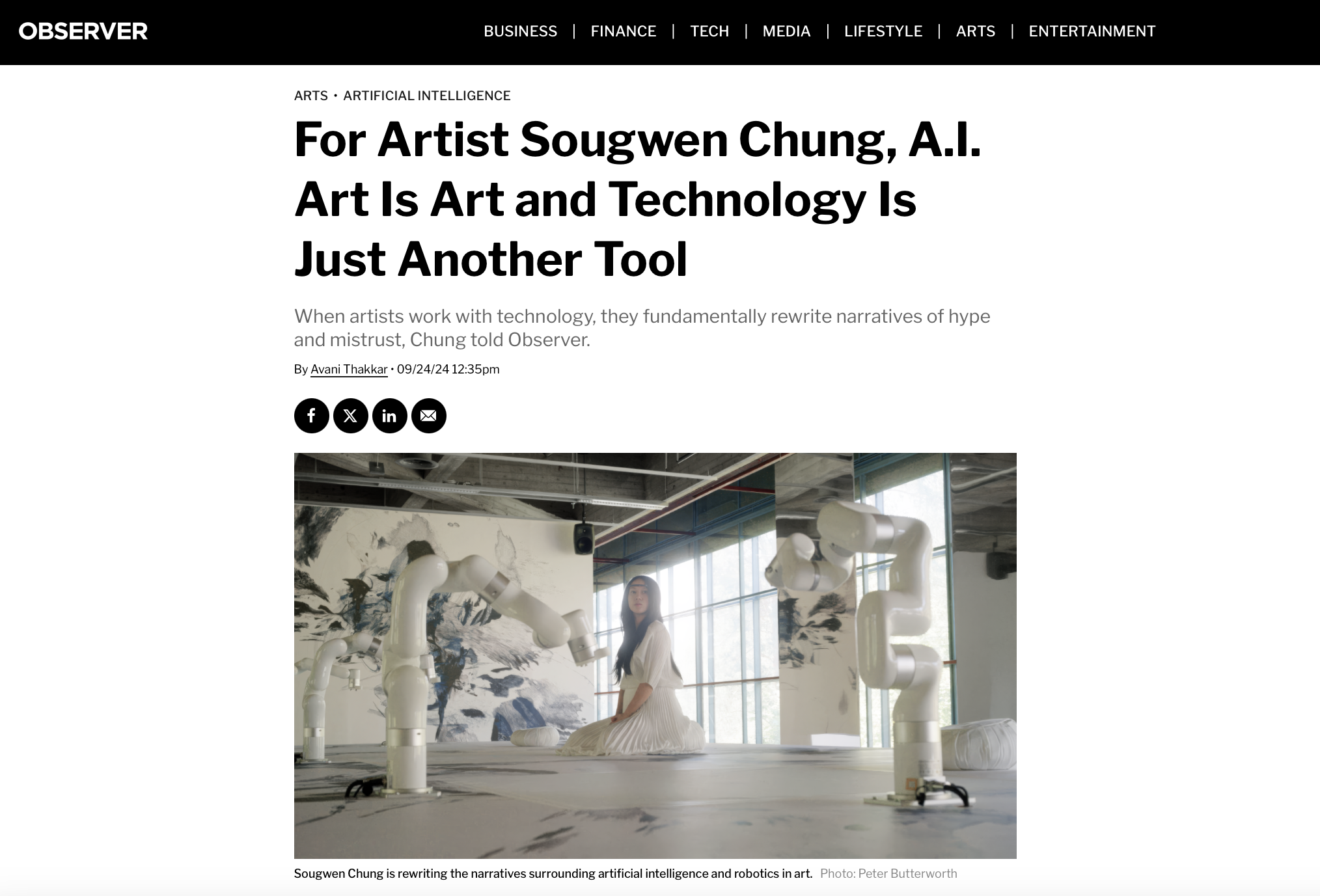

When artists work with technology, they fundamentally rewrite narratives of hype and mistrust, Chung told Observer. It’s not every day you witness robotic arms with brushes in hand, painting in harmonious tandem alongside a …

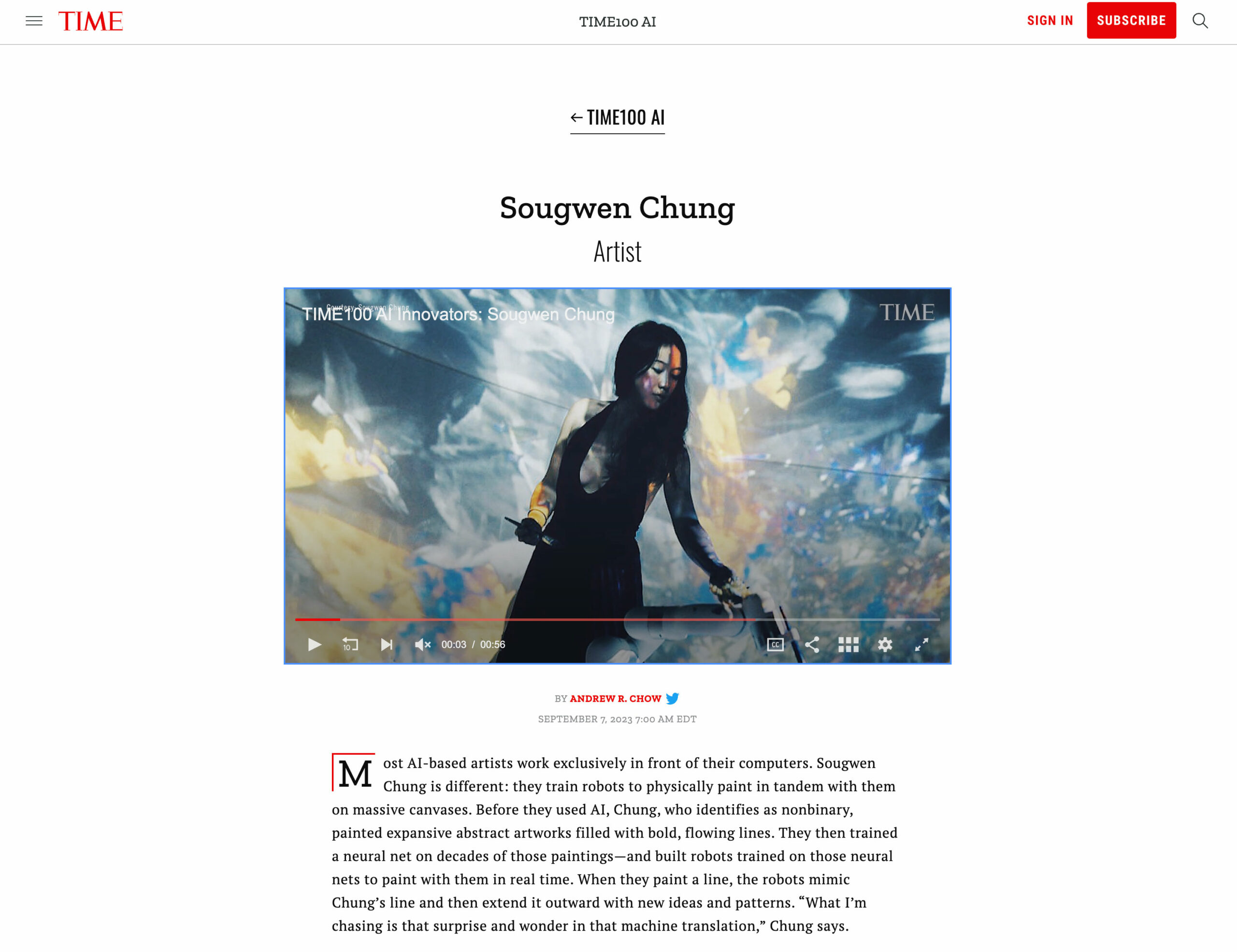

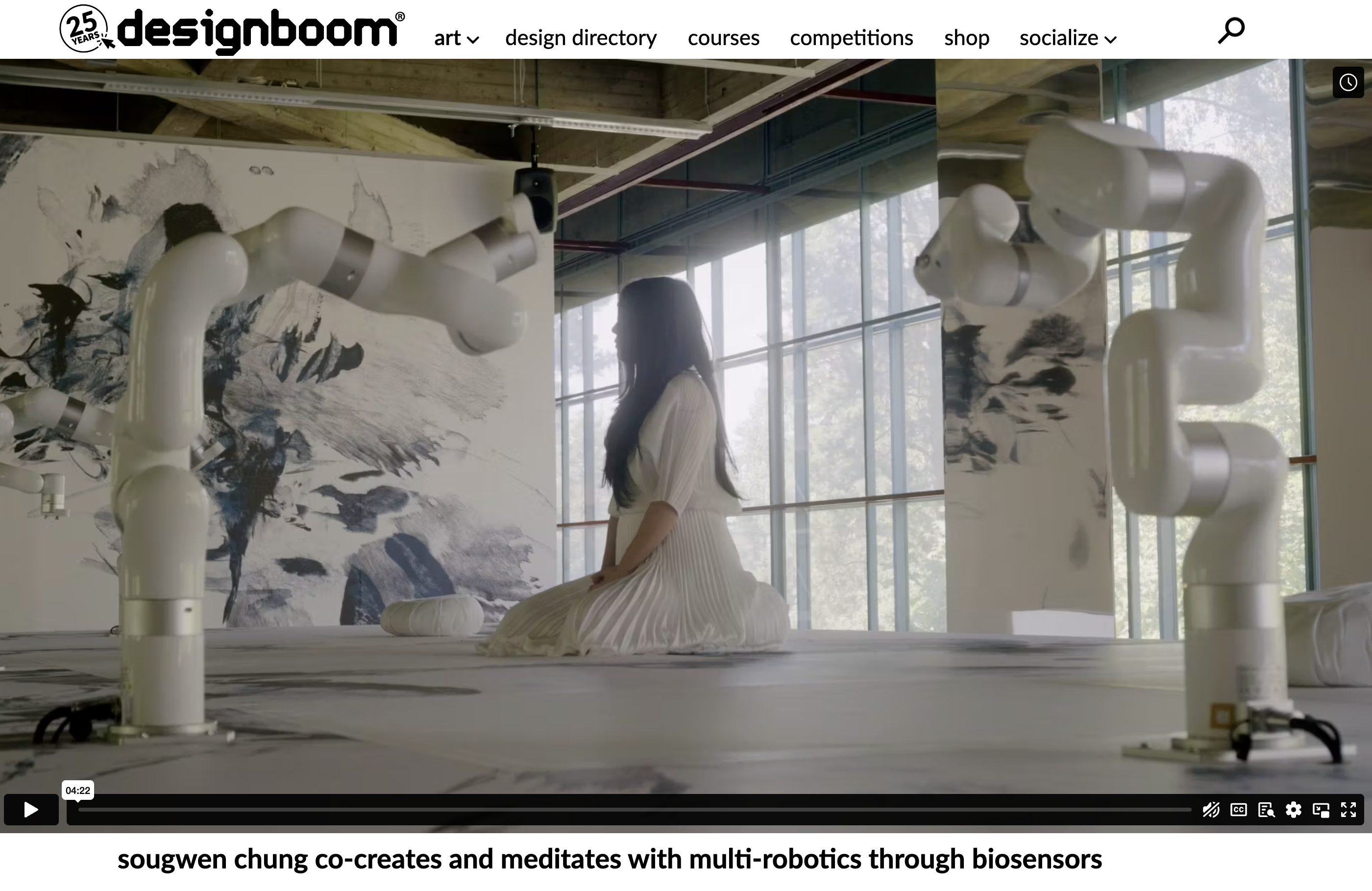

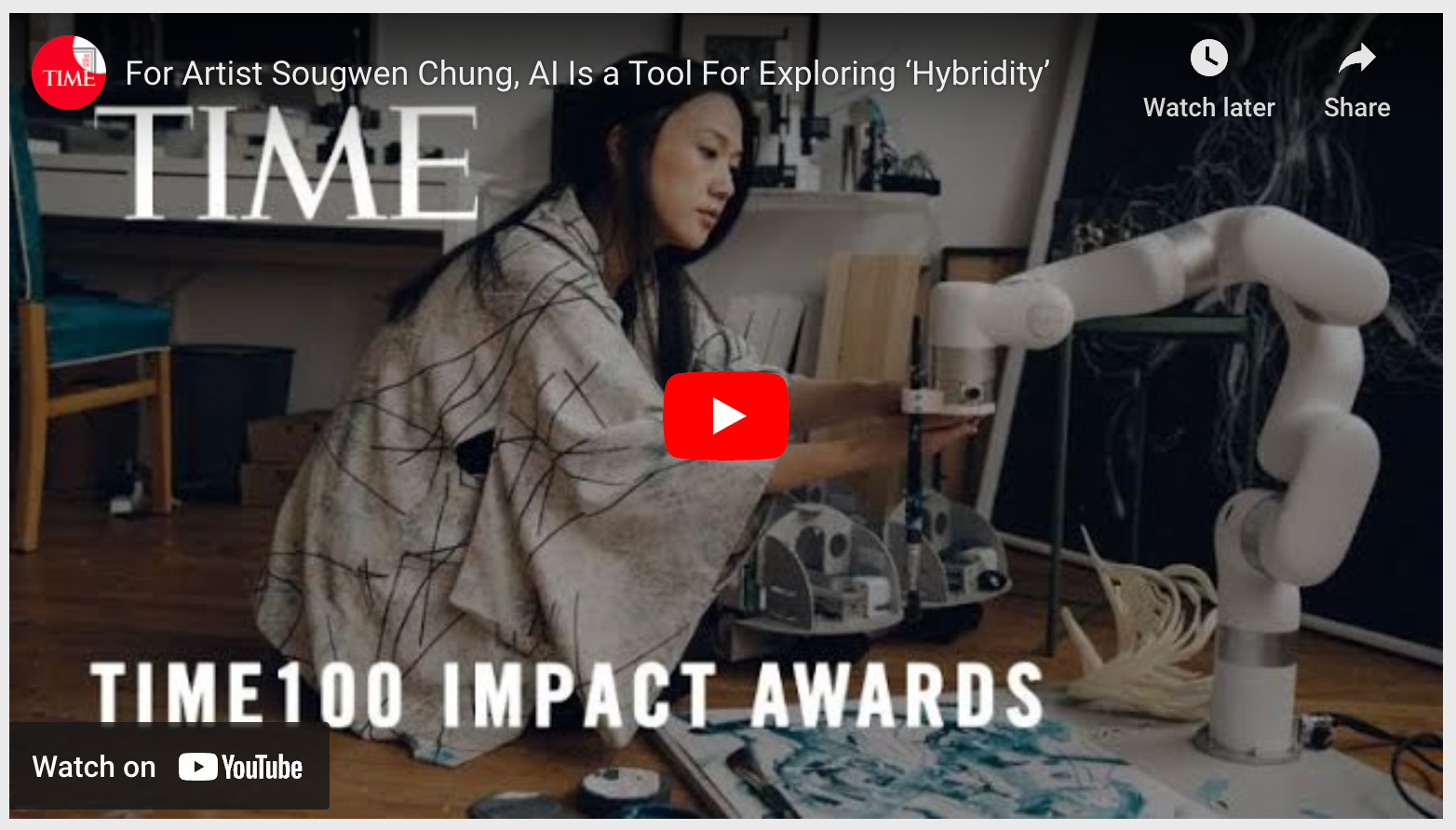

Most AI-based artists work exclusively in front of their computers. Sougwen Chung is different: they train robots to physically paint in tandem with them on massive canvases. Before they used AI, Chung, who identifies …

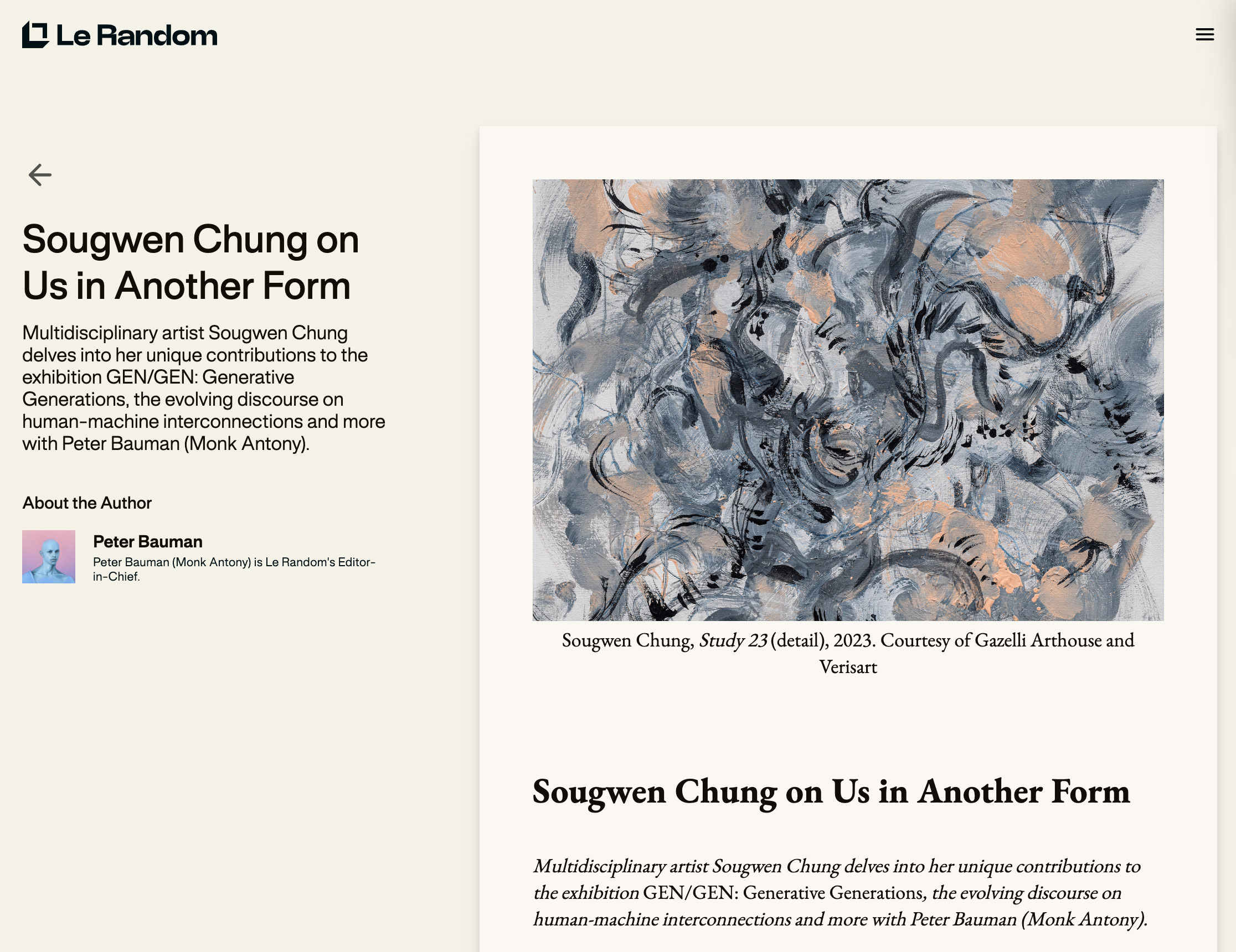

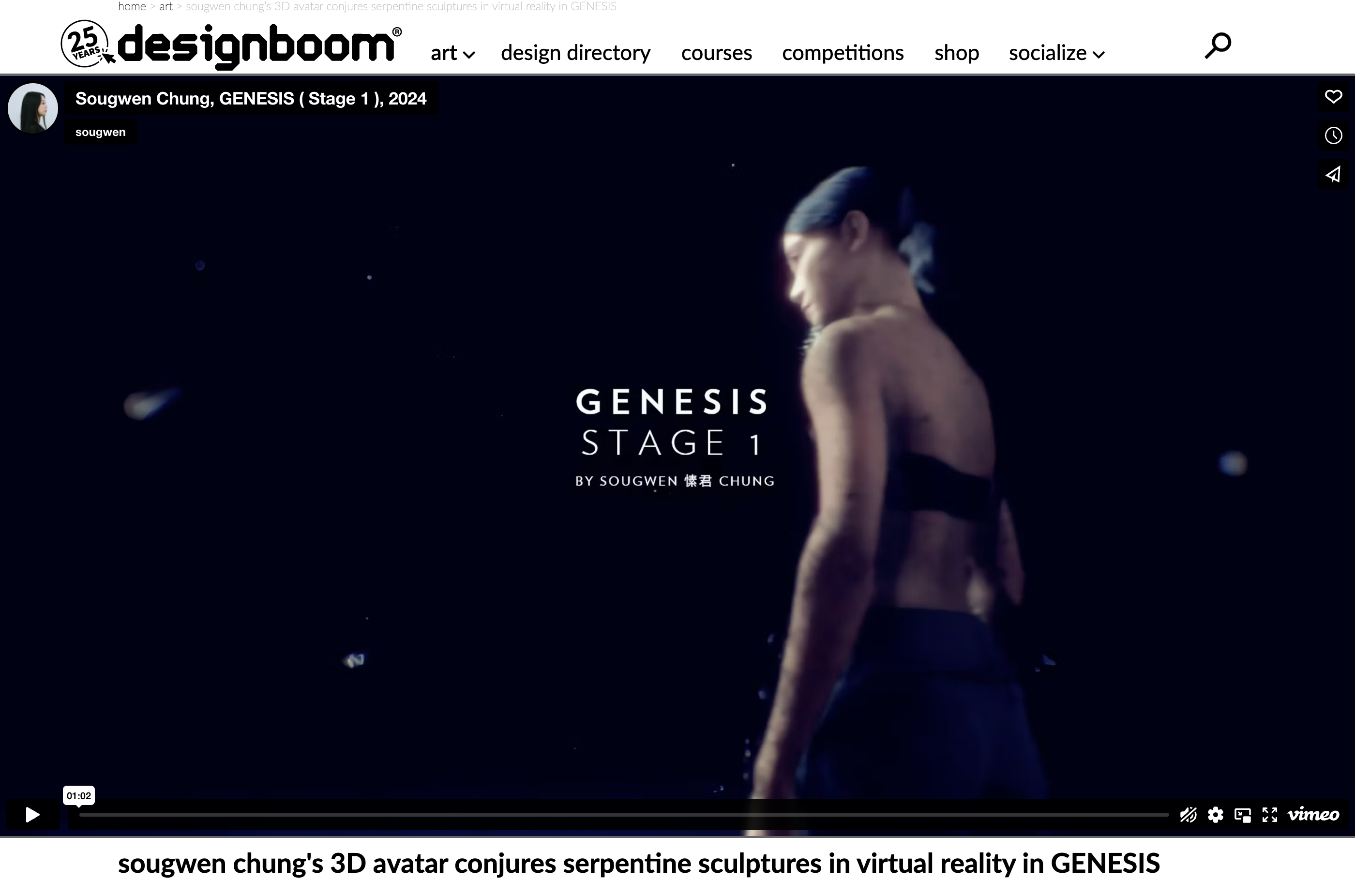

Multidisciplinary artist Sougwen Chung delves into her unique contributions to the exhibition GEN/GEN: Generative Generations, the evolving discourse on human-machine interconnections and more with Peter Bauman (Monk Antony).

With his art-making software dating back to the 1960s, the programmer and artist paved the way for today’s confluence of art and technology. Is artificial intelligence a tool to be used by humans to …

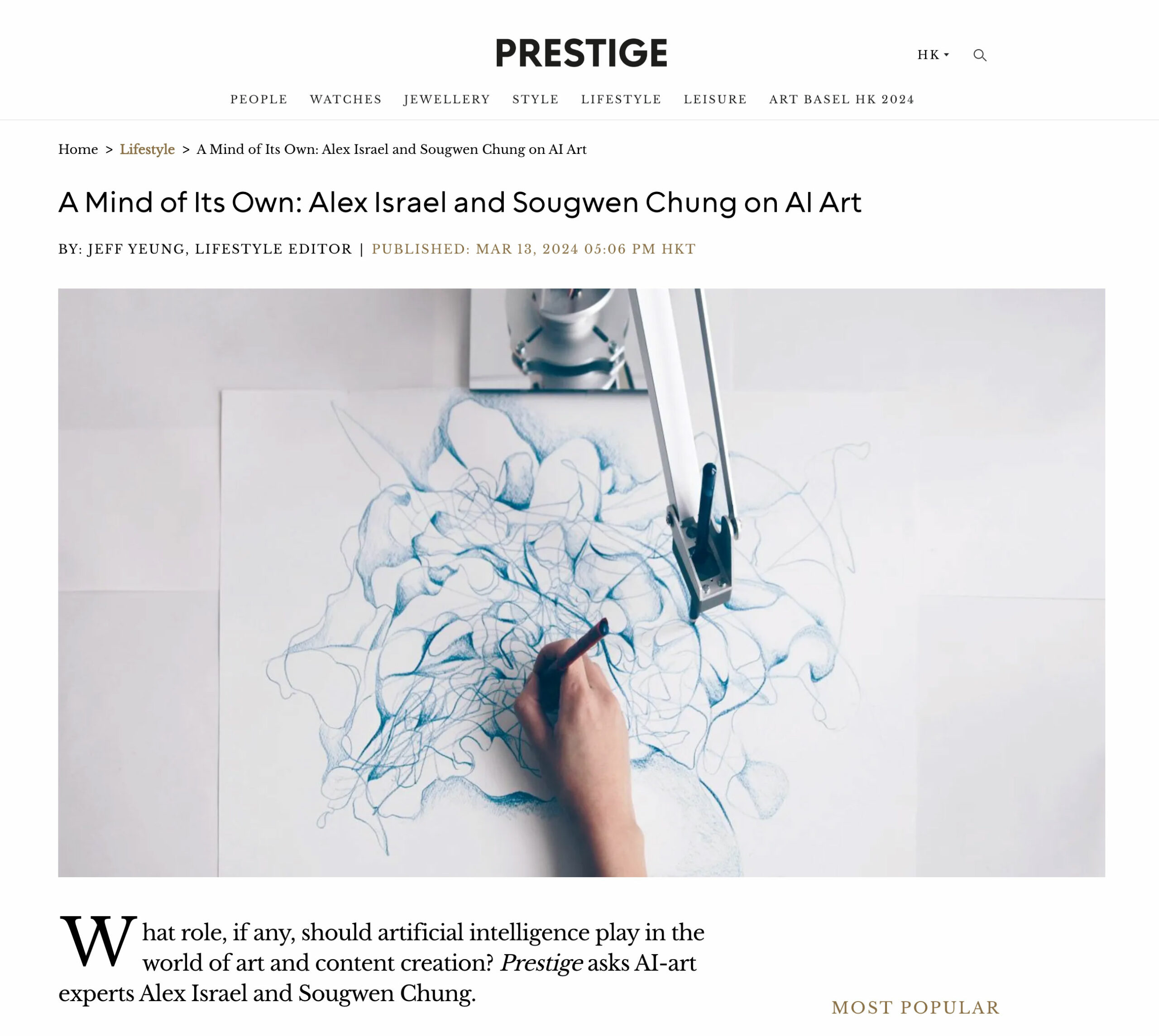

What role, if any, should artificial intelligence play in the world of art and content creation? Prestige asks AI-art experts Alex Israel and Sougwen Chung. As soon as artificial intelligence became easily accessible through the likes of …

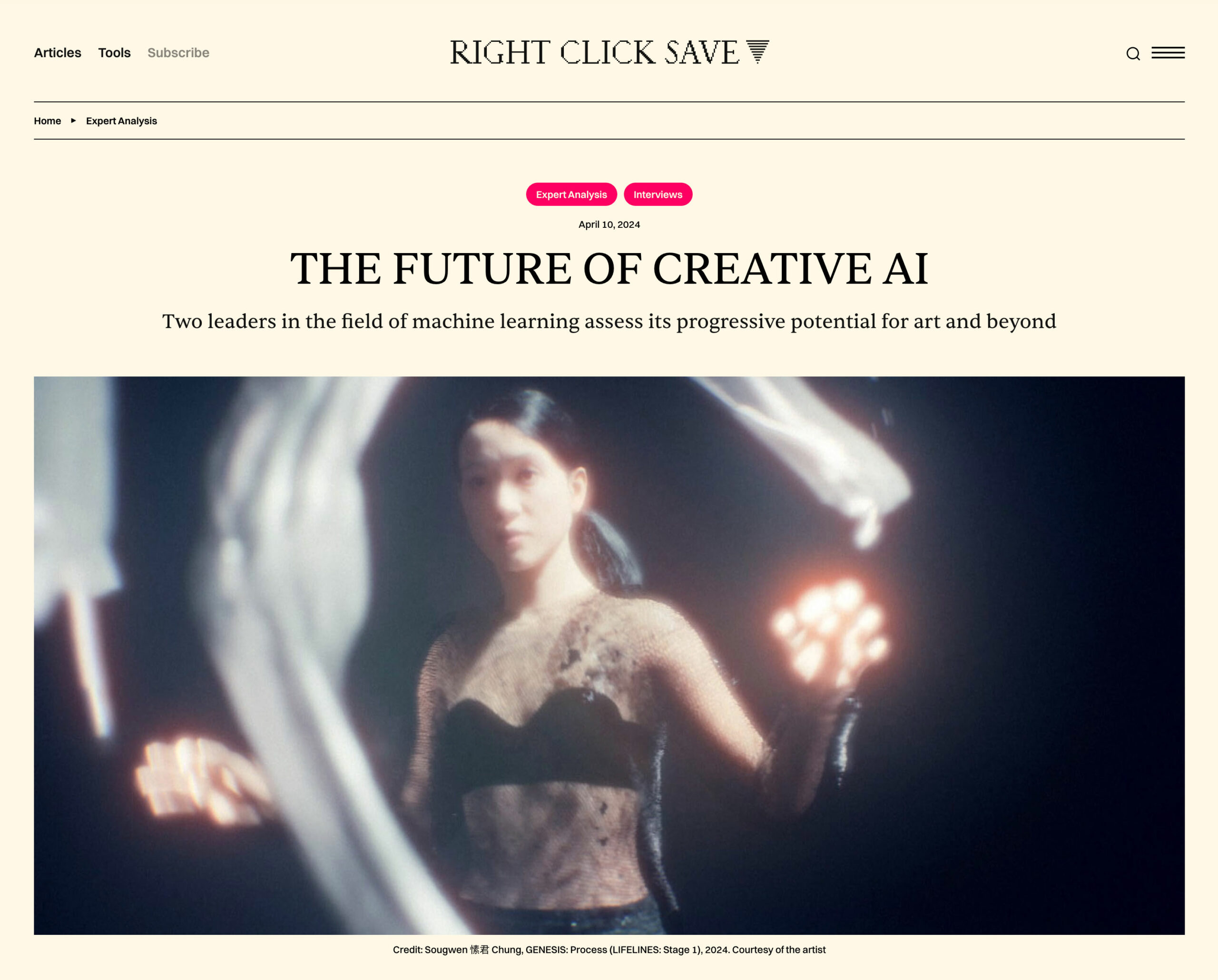

Two leaders in the field of machine learning assess its progressive potential for art and beyond. For over a decade, Mick Grierson has been leading research into creative applications of AI. As a co-founder …

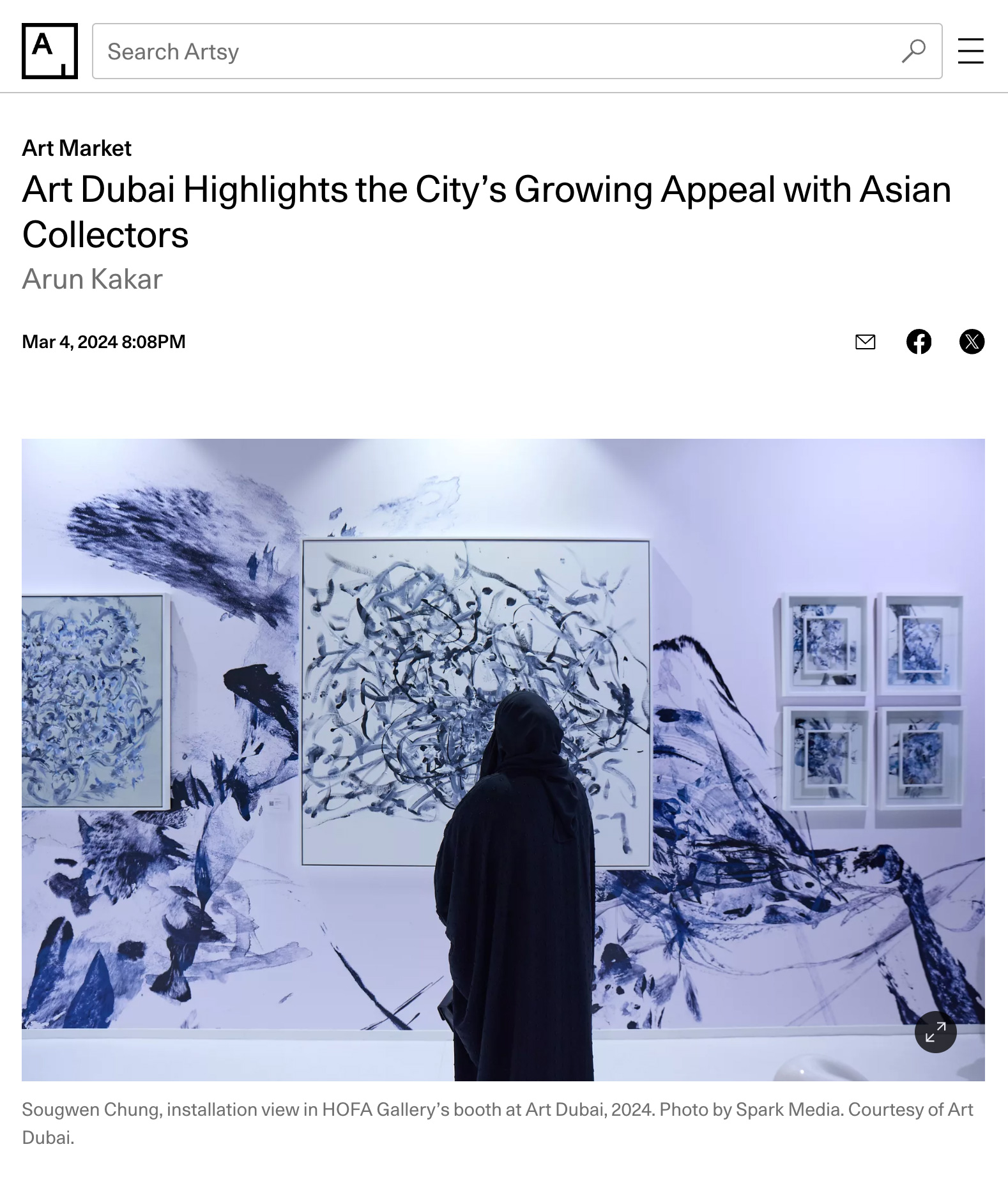

Arun Kakar, Mar 4, 2024, 8:08 PM

A pioneer in the realm of human-machine collaboration, Chinese-Canadian artist and researcher Sougwen Chung has long explored the mark-made-by-hand and the mark-made-by-machine to delve into the dynamics between humans, AI systems, and robotics.

“My work, at its simplest level, is about exploring the contradictions that stem from not fitting neatly into one category,” Sougwen Chung, who identifies as nonbinary, said on Sunday in Dubai, where they accepted …